Part 3: Precision vs Probability. Testing Limits

We tend to assume that producing correct answers is simply what computers do. We have come to see computers as rigid deliverers of objective accuracy. After all, a computer’s answers are founded on “bits”: 010101. Each one is either yes or no. With only the options of yes/no, on/off, and 0/1, it is intuitive for the human mind to believe there can only be correct and incorrect.

None of us would use accounting software if it advertised that it was correct 90% of the time. We are not interested in probability when determining how much money is in our bank account to pay our bills or make payroll. We need precise answers.

When someone says an AI “hallucinated,” the underlying assumption is that the AI should have been accurate because it is a computer system. But that is not necessarily the case.

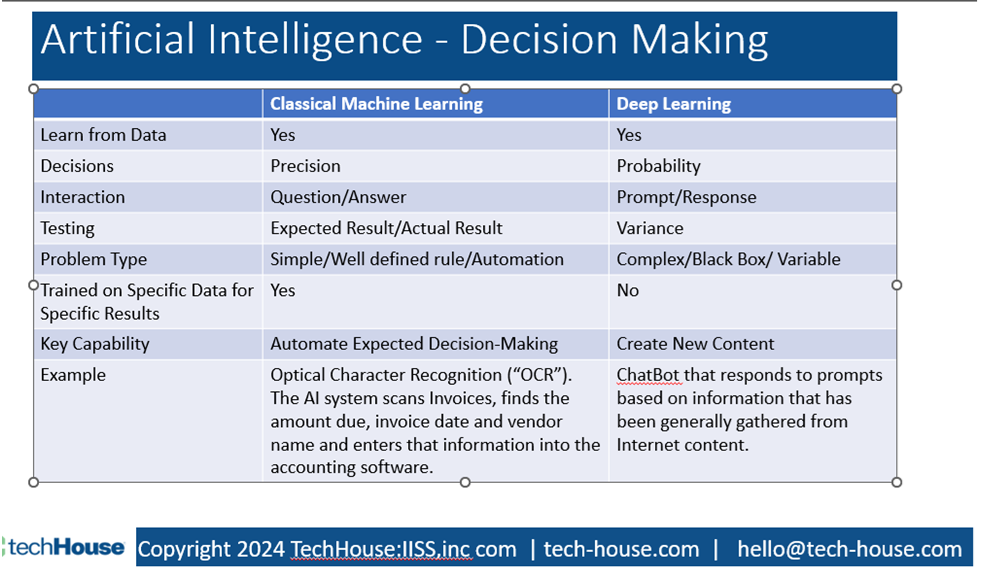

AI models make decisions based on their training. As with our own decisions, there is a probability that a decision will not be correct. There are two broad types of AI models: Classical Machine Learning, like our OCR example above, and Deep Learning.

Chat and prompt AI tools are great examples of Deep Learning AI models. These neural networks consume a tremendous volume of information, such as the 40 years of digitized information on the Internet. The neural network absorbs that information and uses it to respond to the requests it receives. These tools “understand” language using “Large Language Models” (LLMs). Notably, the terms used to interact with chat tools are prompt and response, not question and answer. The response is built from what is most probable, given the information the network has absorbed. Probable is not the same as correct.
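To make “most probable” concrete, here is a minimal Python sketch. The prompt, the four candidate words, and their probabilities are invented purely for illustration; a real large language model scores tens of thousands of possible tokens at every step using learned weights, not a hand-written table. The sketch shows two common ways a next word can be chosen: always taking the most probable word, or sampling in proportion to probability.

```python
import random

# Hypothetical, hand-made probabilities for the word that follows the
# prompt "The invoice total is". These numbers are invented for illustration.
next_word_probs = {
    "$1,200": 0.55,
    "$1,250": 0.25,
    "due": 0.15,
    "unknown": 0.05,
}

def most_probable(probs):
    """Greedy choice: return the single highest-probability word."""
    return max(probs, key=probs.get)

def sample(probs, rng):
    """Sampled choice: pick a word in proportion to its probability."""
    words = list(probs)
    weights = list(probs.values())
    return rng.choices(words, weights=weights, k=1)[0]

rng = random.Random(0)  # fixed seed so the run is repeatable
print(most_probable(next_word_probs))
print([sample(next_word_probs, rng) for _ in range(5)])
```

Nothing in the table checks what the invoice total actually is. The greedy choice is only 55% likely in this made-up table, and sampling will sometimes return one of the other words. That is the gap between precision and probability.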

Unlike the OCR invoice scenario above, it is doubtful that the neural network behind your chatbot has been trained on your specific prompt. Instead, based on all it has absorbed, it will respond with the most probable answer. Although AI models are tested and retrained over time to increase accuracy, the sheer complexity of a neural network trained on the Internet’s vast data stores makes it impossible to test every condition, or even a significant portion of them.
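A rough count shows why exhaustive testing is out of reach. The figures below are assumptions chosen only for illustration: a vocabulary of 50,000 tokens, prompts exactly 20 tokens long, and a hypothetical test rig running one billion tests per second. Real vocabularies and prompt lengths vary by model, and most token sequences are gibberish, but even the meaningful fraction is far too large to test one by one.

```python
# Assumed, illustrative figures (not taken from any specific model).
VOCABULARY_SIZE = 50_000   # distinct tokens the model can read
PROMPT_LENGTH = 20         # tokens in one short prompt

# Every position in the prompt can hold any token, so the number of
# possible prompts is VOCABULARY_SIZE raised to the power PROMPT_LENGTH.
possible_prompts = VOCABULARY_SIZE ** PROMPT_LENGTH

# Hypothetical test rig: one billion tests per second, nonstop.
TESTS_PER_SECOND = 1_000_000_000
SECONDS_PER_YEAR = 60 * 60 * 24 * 365
years_needed = possible_prompts // (TESTS_PER_SECOND * SECONDS_PER_YEAR)

print(f"Possible 20-token prompts: {possible_prompts:.2e}")
print(f"Years to test them all:    {years_needed:.2e}")
```

On these assumptions the script reports roughly 9.5 × 10^93 possible prompts and about 3 × 10^77 years of testing. Any realistic change to the assumed vocabulary or prompt length shifts the exponents, but the numbers stay astronomically large.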

It reminds me of a scene from the movie Armageddon:

President: What is this thing?

Truman: It’s an asteroid, sir.

President: How big are we talking?

Scientist: Sir, our best estimate is 97.6 billion…

Truman: It’s the size of Texas, Mr. President.

President: Dan, we didn’t see this thing coming?

Truman: Well, our object collision budget’s about a million dollars a year. That allows us to track about 3% of the sky, and begging your pardon, sir, but it’s a big-ass sky.

President: Is this going to hit us?

Truman: We’re efforting that as we speak, sir.

President: What kind of damage?

Truman: Damage? A total, sir. It’s what we call a global killer. The end of mankind. Doesn’t matter where it hits, nothing would survive, not even bacteria.

President: My God. What do we do?

A model trained for a specific task can be precise and accurate in a way that a neural network behind a chat (large language model) prompt and response cannot. A chat model absorbs a tremendous volume of information, then uses probability, based on what it has read, to produce what is likely to be an answer to whatever question you have. The critical distinction is that a task-specific model can be tested against the job it performs, while a chat tool’s prompts and responses are not system-tested.

So why would anyone use software that does not offer accuracy as its main feature? When working with Deep Learning AI, we must consider probability and likelihood. Is a Deep Learning foundation model, without specific training on my prompt, the right tool to decide how to handle an employee issue? Or even how to write an email? No.

When is Deep Learning best?

When should we use neural network prompts and responses in our business? When they can improve our capabilities by augmenting human ones. Rather than automation, it’s collaboration.

We will explore collaboration with AI in our next blog post.

Don’t Go It Alone: Some ways TechHouse can help

- Free webinars to stay aware: Contact us about our upcoming webinars.

- Check out Kathy’s AI panel on BrightTALK on June 21, 2024, at 1 p.m. Eastern.

- Our CoPilot Aware™ Solution contains curated assessments, sample policies, communications, and guides for your AI adoption journey.

- Training and mentoring for you and your team: from cybersecurity to critical thinking workshops, our team is dedicated to transferring skills to help your team thrive in this new world.

- Technical Preparedness and Tools: Engage us for AI preparation, data governance, cybersecurity, or a CoPilot/AI rollout in your organization.